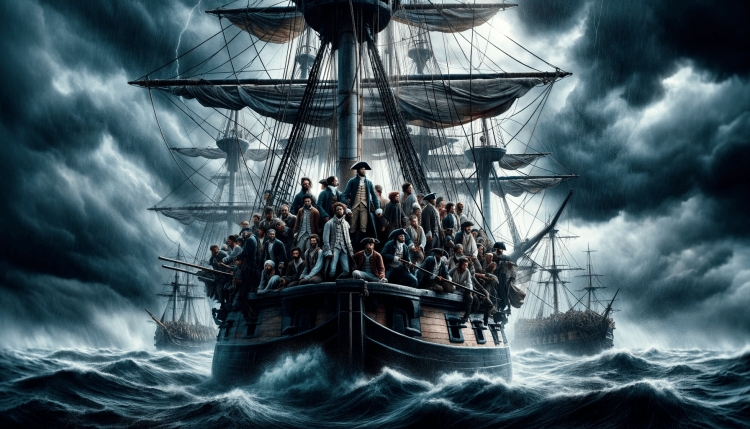

Vacation, shmacation. I might be gallivanting across the pond right now on PTO, but how could I not tackle the Mutiny on the Bounty (excuse me, Board) that’s underway at OpenAI?

Have you heard the latest — that more than 500 OpenAI employees signed a letter saying they may quit and join Sam Altman at Microsoft (which was the latest news before this latest news, which came after the previous latest news about all those heart emojis) unless OpenAI’s nonprofit board resigns and reappoints Altman?

The Turmoil at OpenAI

Last Monday, I published a story about how OpenAI’s six-member board had the power to decide ‘when we’ve attained AGI.’ I wrote about OpenAI’s nonprofit structure, which wielded power over the for-profit side of the company and which several lawyers I spoke with called “unusual,” and about the several board members with ties to the Effective Altruism movement.

Friday was a whirlwind day: the OpenAI board fired CEO Sam Altman, replaced him in the interim with chief technology officer Mira Murati, and removed president Greg Brockman as chairman of the board. Brockman then quit after Altman’s ouster.

In that post, I pointed out that in the context of my original piece, it made sense to me that OpenAI’s nonprofit board (whose mission was to focus on AI safety concerns, not to commercialize products) would be at the heart of Altman’s ouster and Brockman’s removal from the board. The Information had reported that board member Ilya Sutskever, OpenAI’s chief scientist, said that “this was the board doing its duty to the mission of the nonprofit, which is to make sure that OpenAI builds AGI that benefits all of humanity.” Of course, then all hell broke loose.

A Company in Flux

After OpenAI’s board received massive blowback from investors including Microsoft, discussions suddenly began to…you guessed it, bring Altman back. There was the photo of Altman wearing a visitor’s badge at company HQ on Sunday. There were the hundreds of OpenAI employees posting heart emojis indicating they were on his side.

A few hours later, Microsoft CEO Satya Nadella announced that Altman and Brockman would join the company to head a new advanced AI research unit, and he seemed ready to welcome any OpenAI employees ready to jump ship. But that’s not all: in another head-spinning move, the same OpenAI board that kicked Altman to the curb suddenly removed Murati and appointed yet another interim CEO, Emmett Shear, the former CEO of the video game streaming site Twitch.

This seemed to be the last straw: Wired’s Steven Levy reported today that “some OpenAI staff stayed up all night debating a course of action following news that Altman would not return to OpenAI. Many staff were frustrated about a lack of communication over Altman’s firing. Dozens of employees appeared to signal their willingness to jump ship and join Altman last night by posting ‘OpenAI is nothing without its people.’ on X. In their letter, the OpenAI staff threaten to join Altman at Microsoft. ‘Microsoft has assured us that there are positions for all OpenAI employees at this new subsidiary should we choose to join,’ they write.”

It seems ironic, to say the least, that the unprecedented actions of OpenAI’s nonprofit board, which took its AI safety mission so seriously that it would sack the CEO, would end up undermining that mission. The board created industry-wide uncertainty and fractured trust with its employees, leading those employees to conduct their own mutiny and leaving OpenAI weaker, and likely less safe (at least in the short term), as a result. Certainly the board’s mission to create artificial intelligence that benefits all of humanity has been slowed significantly.

Chief scientist Ilya Sutskever certainly seems to regret his actions, with a post on X this morning saying: “I deeply regret my participation in the board’s actions. I never intended to harm OpenAI. I love everything we’ve built together and I will do everything I can to reunite the company.”

Ultimately, OpenAI proved that for now, it is still humans who err and go rogue. The AI is fine, it seems, but the humans have a lot of work to do. Board governance may seem boring compared to building AGI, but in hindsight, it seems OpenAI, and its stated mission of building safe AGI, would have been better off focusing on building a better board. Instead, it looks like it has a mutiny on its hands.