Researchers have made significant progress in vision-language models (VLM) that can match natural language queries to objects in visual scenes. However, applying these models to robotics systems poses challenges in generalizing their abilities. In a new paper by Meta AI and New York University, a novel framework called OK-Robot is introduced. It integrates pre-trained machine learning models, combining VLMs with movement-planning and object-manipulation models. This framework enables a robotics system to perform tasks in previously unseen environments without the need for additional training.

Challenge of Generalization in Robotics

Traditionally, robotic systems are designed for deployment in familiar environments. They struggle to adapt beyond locations where they have been trained, which becomes problematic in settings with limited data, such as unstructured homes. While individual components like VLMs and robotic skills for navigation and grasping have advanced, combining these models with robot-specific primitives still results in poor performance.

“Making progress on this problem requires a careful and nuanced framework that both integrates VLMs and robotics primitives, while being flexible enough to incorporate newer models as they are developed by the VLM and robotics community,”

– Researchers at Meta AI and New York University

The OK-Robot Framework

OK-Robot combines state-of-the-art VLMs with powerful robotics primitives to perform pick-and-drop tasks in previously unseen environments. The models used in this framework are trained on large, publicly available datasets. The framework consists of three primary subsystems:

- Open-vocabulary object navigation module

- RGB-D grasping module

- Dropping heuristic system

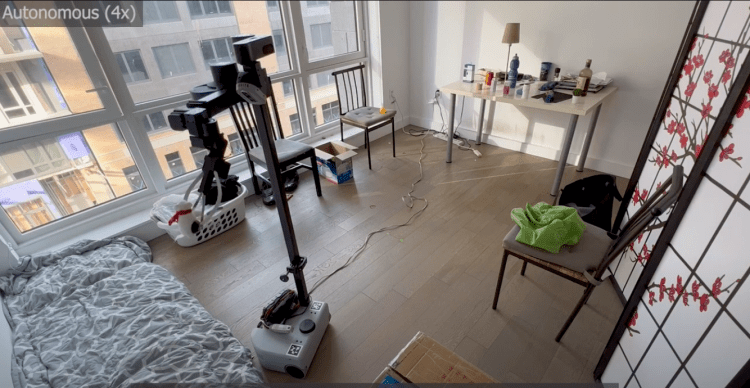

When deployed in a new home, OK-Robot requires a manual scan of the interior. An iPhone app captures a sequence of RGB-D images as the user moves around the building. These images, along with camera pose and positions, are used to create a 3D environment map. The framework employs a vision transformer (ViT) model to process each image and extract object information, which is then combined with environment data to create a semantic object memory module.

Given a natural language query for picking an object, the memory module computes the embedding of the prompt and matches it with the object that has the closest semantic representation. OK-Robot utilizes navigation algorithms to find the optimal path to the object’s location, considering the robot’s maneuverability in the environment. To pick the object, the robot employs an RGB-D camera, an object segmentation model, and a pre-trained grasp model. A similar process is followed for reaching the destination and dropping the object.

“From arriving into a completely novel environment to start operating autonomously in it, our system takes under 10 minutes on average to complete the first pick-and-drop task,”

– Researchers at Meta AI and New York University

Performance and Improvements

Researchers conducted experiments in 10 homes, running 171 pick-and-drop tasks to evaluate OK-Robot’s performance in novel environments. The framework achieved a success rate of 58% for full pick-and-drops, even though the models were not specifically trained for such environments. By refining the queries, decluttering the space, and excluding adversarial objects, the success rate increased to above 82%.

OK-Robot, however, has some limitations. It occasionally fails to match the natural language prompt with the correct object. The grasping model may also fail on certain objects, and the robot hardware has its own limitations. Additionally, the object memory module remains frozen after the initial environment scan, preventing dynamic adaptation to changes in objects and arrangements.

Nevertheless, the OK-Robot project provides valuable insights. It demonstrates the effectiveness of current open-vocabulary vision-language models in identifying objects in the real world and navigating towards them in a zero-shot manner. The findings also highlight the applicability of pre-trained, specialized robot models in open-vocabulary grasping tasks in unseen environments. Furthermore, the successful combination of pre-trained models showcases the potential for zero-shot task performance without additional training.

The OK-Robot framework marks the beginning of a research field with plenty of opportunities for improvement and exploration.