Fine-tuning large language models (LLMs) has become a valuable tool for businesses that want to tailor AI capabilities to specific tasks and deliver personalized user experiences. However, the computational and financial overhead of fine-tuning, and of serving the resulting models, has largely limited it to enterprises with ample resources. To address this, researchers at Stanford University and the University of California, Berkeley have developed S-LoRA, a technique that significantly reduces the cost of deploying fine-tuned LLMs.

The Advantages of S-LoRA

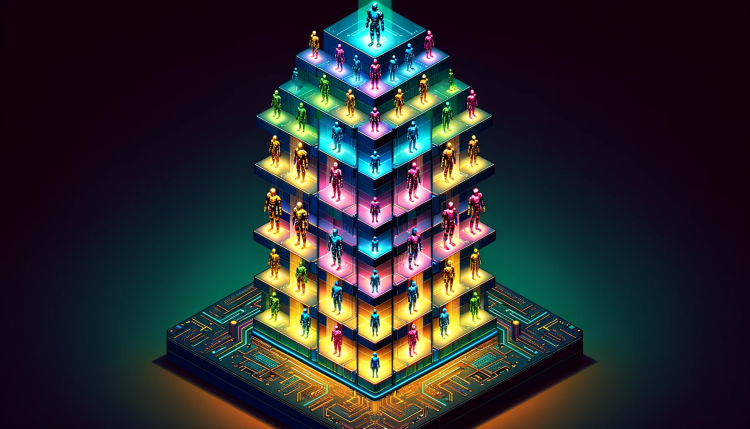

S-LoRA allows companies to run hundreds or even thousands of fine-tuned models on a single graphics processing unit (GPU), which opens the door to many new LLM applications. The traditional approach to fine-tuning retrains the whole model on new examples tailored to a specific task, which demands substantial compute because LLMs have billions of parameters. Parameter-efficient fine-tuning (PEFT) techniques, such as low-rank adaptation (LoRA), have emerged as cheaper alternatives that train only a small number of parameters.

“Remarkably, LoRA can reduce the number of trainable parameters by several orders of magnitude while maintaining accuracy levels on par with those achieved through full-parameter fine-tuning.” – Microsoft

Rather than updating all of the base model's weights, LoRA freezes them and, for selected weight matrices, trains a pair of small low-rank matrices whose product is added to the frozen weights. This sharply reduces memory and compute requirements, making it more affordable to customize a model. LoRA's effectiveness has led to wide adoption in the AI community, and many LoRA adapters have been released for pre-trained LLMs and diffusion models.
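To make the low-rank idea concrete, here is a minimal sketch in plain Python, assuming a single weight matrix `W` of shape d_out × d_in, an adapter made of `A` (r × d_in) and `B` (d_out × r), and a scaling factor `scale` (often set to alpha / r in LoRA implementations). The function names are hypothetical and only illustrate the arithmetic; they are not S-LoRA's or any library's API.

```python
# Minimal LoRA sketch: y = W x + scale * B (A x), with W frozen.
# Matrices are lists of rows; no ML framework is assumed.

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def lora_forward(W, A, B, x, scale=1.0):
    """Frozen base projection plus the low-rank adapter update."""
    base = matvec(W, x)                 # original model output
    delta = matvec(B, matvec(A, x))     # rank-r correction learned by LoRA
    return [b + scale * d for b, d in zip(base, delta)]

def trainable_params(d_out, d_in, r):
    """Full fine-tuning trains d_out*d_in values; LoRA trains r*(d_in + d_out)."""
    return d_out * d_in, r * (d_in + d_out)

full, lora = trainable_params(4096, 4096, 8)
print(full, lora, full // lora)  # 16777216 65536 256
```

For one 4096 × 4096 matrix with rank 8, the adapter has 256 times fewer trainable values than the full matrix, which is where LoRA's savings come from.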

Managing Multiple LoRA Models

After fine-tuning, companies can either merge the LoRA weights into the base LLM or keep them as separate components that are plugged into the main model at inference time. The second approach lets a single copy of the base model serve many LoRA adapters, each representing a fine-tuned variant. Because each adapter is tiny compared with the base model, this modular setup keeps the memory footprint small and lets businesses offer bespoke LLM-driven services without prohibitive costs.
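The two deployment options can be sketched as follows, under simplifying assumptions: `merge` folds one adapter into the weights (W' = W + scale · BA), while `serve_batch` keeps adapters separate and applies each request's own adapter on top of a shared base weight. The adapter names (`"legal"`, `"support"`) and the request format are hypothetical. For clarity the base product is computed per request here; a real server would compute it for the whole batch in one matrix multiply.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def merge(W, A, B, scale=1.0):
    """Fold one adapter into the base weight: W' = W + scale * (B @ A)."""
    r = len(A)
    return [[W[i][j] + scale * sum(B[i][k] * A[k][j] for k in range(r))
             for j in range(len(W[0]))] for i in range(len(W))]

def serve_batch(W, adapters, requests, scale=1.0):
    """Serve a mixed batch with one shared base weight and unmerged adapters.
    Each request is (adapter_id, x); adapter_id None means the plain base model."""
    outputs = []
    for adapter_id, x in requests:
        y = matvec(W, x)                          # shared base computation
        if adapter_id is not None:
            A, B = adapters[adapter_id]           # this request's low-rank delta
            delta = matvec(B, matvec(A, x))
            y = [yi + scale * di for yi, di in zip(y, delta)]
        outputs.append(y)
    return outputs

W = [[1, 0], [0, 1]]
adapters = {"legal": ([[1, 1]], [[2], [0]]), "support": ([[1, 0]], [[0], [1]])}
batch = [("legal", [1, 2]), ("support", [1, 2]), (None, [1, 2])]
print(serve_batch(W, adapters, batch))  # [[7.0, 2.0], [1.0, 3.0], [1, 2]]
```

Merging gives the same result for a single adapter (`matvec(merge(W, *adapters["legal"]), [1, 2])` is `[7.0, 2.0]`), but it bakes that adapter into the weights, so serving many variants would require many full copies of the model.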

Challenges and Solutions

Serving many LoRA adapters on top of a single full-parameter base LLM raises several technical challenges. Memory management is the first: GPU memory is finite, so only a limited number of adapters can be loaded alongside the base model at any one time. An efficient memory management system is needed to keep serving smooth.
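One simple way to picture this constraint is a cache that keeps every adapter in host memory and only a bounded number "on the GPU". The sketch below is a hypothetical illustration that uses least-recently-used (LRU) eviction as an assumed policy; it is not S-LoRA's actual scheduler, which, for example, must not evict an adapter that a running request still needs.

```python
from collections import OrderedDict

class AdapterCache:
    """Hypothetical sketch: all adapters live in host memory; at most
    `gpu_slots` of them are resident in (simulated) GPU memory at once.
    The least recently used adapter is evicted when space is needed."""

    def __init__(self, host_store, gpu_slots):
        self.host_store = host_store      # adapter_id -> weights (host memory)
        self.gpu_slots = gpu_slots
        self.resident = OrderedDict()     # adapter_id -> weights ("on GPU")
        self.loads = 0                    # number of simulated host-to-GPU copies

    def get(self, adapter_id):
        if adapter_id in self.resident:
            self.resident.move_to_end(adapter_id)   # mark as recently used
            return self.resident[adapter_id]
        if len(self.resident) >= self.gpu_slots:
            self.resident.popitem(last=False)       # evict least recently used
        weights = self.host_store[adapter_id]       # simulated transfer
        self.resident[adapter_id] = weights
        self.loads += 1
        return weights

store = {f"user{i}": f"weights-{i}" for i in range(1000)}
cache = AdapterCache(store, gpu_slots=2)
for adapter_id in ["user1", "user2", "user1", "user3", "user2"]:
    cache.get(adapter_id)
print(cache.loads, list(cache.resident))  # 4 ['user3', 'user2']
```

With only two slots, the access pattern above forces four transfers; a real system would size the GPU budget and scheduling policy so that such reloads stay rare.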

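A second challenge is batching, which LLM servers use to process multiple requests concurrently. Adapters can have different ranks and requests have different sequence lengths, so batching them together can fragment memory and create computational bottlenecks. These problems grow with larger LLMs that must be split across multiple GPUs. To address them, S-LoRA stores all adapters in main memory and fetches only the adapters used by currently running requests into GPU memory. Its "Unified Paging" mechanism manages adapter weights of different ranks and key-value (KV) cache tensors of varying sequence lengths in a single paged memory pool, which reduces fragmentation and improves memory use and query throughput.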
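The core of the paging idea can be sketched as a pool of fixed-size pages shared by two kinds of data. This is a simplified, hypothetical illustration: it assumes each page holds one vector of the model's hidden dimension, so a sequence's KV cache takes one page per token and an adapter takes one page per unit of rank, ignoring the number of layers and the separate K/V and A/B tensors. The class and method names are not S-LoRA's code.

```python
class UnifiedPagePool:
    """Simplified sketch of a unified paged memory pool. KV caches and LoRA
    adapters both draw fixed-size pages from one free list, so neither needs
    a contiguous region and freed pages can be reused by either kind."""

    def __init__(self, num_pages):
        self.free = list(range(num_pages))   # indices of free pages
        self.owner = {}                      # owner key -> list of page indices

    def alloc(self, key, n_pages):
        """Give `key` n more pages (a KV cache can grow token by token)."""
        if n_pages > len(self.free):
            raise MemoryError(f"need {n_pages} pages, only {len(self.free)} free")
        pages = [self.free.pop() for _ in range(n_pages)]
        self.owner.setdefault(key, []).extend(pages)
        return pages

    def release(self, key):
        """Return all pages held by `key` to the shared free list."""
        self.free.extend(self.owner.pop(key, []))

    def free_pages(self):
        return len(self.free)

pool = UnifiedPagePool(64)
pool.alloc(("adapter", "a1"), 16)   # rank-16 adapter
pool.alloc(("kv", "req1"), 40)      # 40-token sequence
print(pool.free_pages())            # 8
pool.release(("adapter", "a1"))     # adapter no longer needed
pool.alloc(("kv", "req2"), 20)      # a KV cache reuses the adapter's pages
print(pool.free_pages())            # 4
```

Because every allocation is measured in the same page unit, memory freed by an evicted adapter can immediately serve a growing KV cache, and vice versa.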

To keep LoRA adapters compatible with large transformer models that run across multiple GPUs, S-LoRA also applies a tensor parallelism strategy to the adapter computations. Together, these techniques let S-LoRA serve many LoRA adapters on a single GPU or across several GPUs with high throughput and memory efficiency.
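As a generic illustration of tensor parallelism for a LoRA layer, and not S-LoRA's exact partitioning scheme, the sketch below simulates "devices" in plain Python. It assumes the base weight `W` and the adapter matrix `B` are split the same way along the output dimension (what Megatron-LM calls column parallelism), while the small matrix `A` is replicated. Each device computes its slice of W x + B(A x), and concatenating the slices stands in for an all-gather.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def split_rows(M, n_shards):
    """Split the output rows into equal contiguous blocks (assumes even division)."""
    size = len(M) // n_shards
    return [M[i * size:(i + 1) * size] for i in range(n_shards)]

def tp_lora_forward(W, A, B, x, n_devices):
    """Simulated output-dimension-parallel LoRA layer.
    W and B are sharded identically; A is replicated on every device."""
    ax = matvec(A, x)                    # rank-r intermediate, same on every device
    out = []
    for W_shard, B_shard in zip(split_rows(W, n_devices), split_rows(B, n_devices)):
        base = matvec(W_shard, x)        # this device's slice of the base output
        delta = matvec(B_shard, ax)      # matching slice of the adapter update
        out.extend(b + d for b, d in zip(base, delta))   # "all-gather" by concatenation
    return out

W = [[1, 0], [0, 1], [1, 1], [2, 0]]
A = [[1, 1]]
B = [[1], [0], [0], [2]]
print(tp_lora_forward(W, A, B, [1, 2], n_devices=2))  # [4, 2, 3, 8]
```

Sharding `B` the same way as `W` means each device adds its adapter slice locally, so the adapter introduces no extra communication beyond what the base layer already needs.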

Validation and Future Work

The researchers evaluated S-LoRA by serving variants of Meta's open-source Llama model on several GPU setups. The results show that S-LoRA maintains high throughput and memory efficiency as the number of served adapters grows. Compared with existing parameter-efficient fine-tuning libraries, S-LoRA improved throughput by up to 30-fold.

Because S-LoRA can serve a large number of adapters simultaneously with little computational overhead, it is well suited to personalized LLMs. For example, each user could be served by an adapter fine-tuned on that user's historical data, making the LLM's responses more relevant to that user. The S-LoRA code is available on GitHub, and the researchers plan to integrate it into popular LLM-serving frameworks, which would make it easier for companies to adopt S-LoRA in their applications.

“A service provider may want to serve users with the same base model but different adapters for each. The adapters could be tuned with the users’ history data, for example.” – Ying Sheng, PhD Student at Stanford University

S-LoRA is also compatible with in-context learning, which makes it more versatile: a user can be served by a personalized adapter while recent data is supplied as context in the prompt, yielding more relevant and efficient AI interactions.
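Combining a personalized adapter with in-context data can be pictured as a simple request builder. The sketch below is hypothetical: `user_adapters`, the request dictionary, and the prompt layout are illustrative assumptions, not an S-LoRA interface. It picks the user's adapter (trained offline on older history), places the most recent interactions in the prompt, and falls back to the base model for users without an adapter.

```python
def build_request(user_id, user_adapters, recent_history, question, max_context=3):
    """Hypothetical routing sketch: personalized adapter + recent context."""
    adapter_id = user_adapters.get(user_id)      # None -> plain base model
    # Guard max_context == 0, because a [-0:] slice would return the whole list.
    context = recent_history[-max_context:] if max_context > 0 else []
    prompt = "\n".join(context + [question])
    return {"adapter_id": adapter_id, "prompt": prompt}

user_adapters = {"alice": "lora-alice"}
req = build_request("alice", user_adapters, ["q1", "a1", "q2", "a2"], "q3?", max_context=2)
print(req)  # {'adapter_id': 'lora-alice', 'prompt': 'q2\na2\nq3?'}
```

The adapter captures long-term preferences cheaply at serving time, while the prompt carries fresh information that arrived after the adapter was trained.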