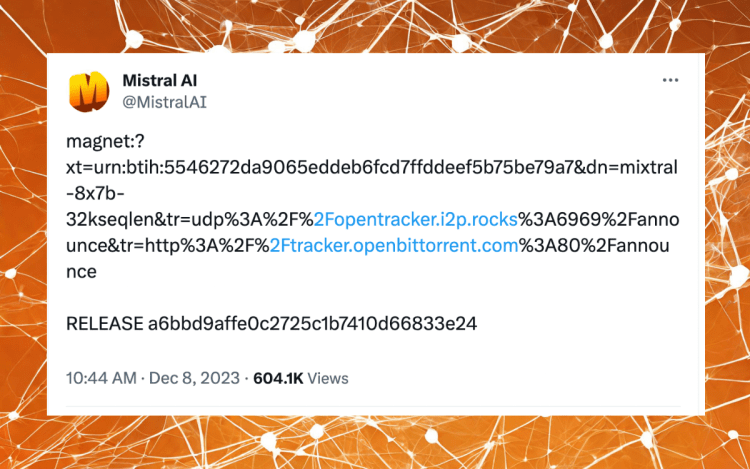

In a surprising move, Mistral AI, an open source AI startup, unveiled its newest large language model (LLM) in a strikingly unconventional way. Instead of the usual polished, rehearsed release video, the company took a minimalist approach: it simply shared a torrent link for downloading the model.

The strategy quickly caught the AI community's attention, especially because it came shortly after Google released Gemini alongside a highly produced video. Andrej Karpathy from OpenAI described the Gemini release as “an over-rehearsed professional release video talking about a revolution in AI.”

One video from Google’s launch drew heavy criticism for being edited and staged to make Gemini appear more capable than it actually was. Mistral AI’s MoE 8x7B model, by contrast, which some have called a “scaled-down GPT-4,” was simply made available for download as a torrent file.

The Mistral LLM is a mixture of experts (MoE) model consisting of 8 experts of 7 billion parameters each. Notably, only 2 of the 8 experts are used to process each token. Speculation based on leaks about GPT-4 suggests that it is also an MoE model with 8 experts, each with 111 billion parameters of its own plus 55 billion shared attention parameters (166 billion parameters per expert model). GPT-4 is likewise said to use only 2 experts per token.
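To make the “only 2 experts per token” idea concrete, here is a minimal Python sketch of top-2 routing in a sparse MoE layer. It is a toy under stated assumptions, not Mistral’s code: the linear router, the helper names `router_logits` and `moe_layer`, and the scaling “experts” are all hypothetical, and the gating shown (a softmax over the two selected router scores) is one common variant rather than a confirmed detail of Mistral’s model.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Minimal sketch of top-k MoE routing (hypothetical; not Mistral's implementation).
Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def router_logits(x: Vector, w_gate: Sequence[Vector]) -> List[float]:
    """Toy linear router: one score per expert, score_i = dot(w_gate[i], x)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in w_gate]


def moe_layer(
    x: Vector, w_gate: Sequence[Vector], experts: Sequence[Expert], k: int = 2
) -> Tuple[Vector, List[int]]:
    """Sparse mixture-of-experts step for a single token vector x.

    Picks the k experts with the highest router scores, runs only those,
    and mixes their outputs with softmax weights over the selected scores.
    Returns the mixed output and the indices of the experts that ran.
    """
    logits = router_logits(x, w_gate)
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # the other len(experts) - k experts are never evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    calls = [0] * 8

    def make_expert(i: int) -> Expert:
        # Hypothetical expert: scales its input by (i + 1) and counts its calls.
        def expert(x: Vector) -> Vector:
            calls[i] += 1
            return [(i + 1) * xi for xi in x]
        return expert

    experts = [make_expert(i) for i in range(8)]
    w_gate = [[0.1 * i, 0.0] for i in range(8)]  # toy router weights
    out, chosen = moe_layer([1.0, 2.0], w_gate, experts, k=2)
    print("experts used:", chosen, "output:", out, "expert calls:", sum(calls))
```

With 8 experts and `k=2`, only two expert functions run for the token, so per-token compute tracks the two active experts while the model still has to store the weights of all eight.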

“Mistral was well-known for this kind of release, without any paper, blog, code, or press release,” shared Uri Eliabayev, an AI consultant and founder of the “Machine & Deep Learning Israel” community.

The unconventional release certainly sparked discussion within the AI community. Jay Scambler, an open source AI advocate, saw the buzz as the whole point of Mistral AI’s guerrilla move:

“Definitely unusual, but it has generated quite a bit of buzz which I think is the point,” messaged Jay Scambler.

Many in the AI community praised the release. Notably, entrepreneur George Hotz expressed his support:

“AI moves fast. Mistral AI, now one of my favorite brands in the AI space.”

Mistral AI, a Paris-based startup, has made waves in the industry, most recently by reaching a $2 billion valuation in a funding round led by Andreessen Horowitz. It previously set a record with its $118 million seed round, reportedly the largest seed round in European history. Its first large language model, Mistral 7B, launched in September and established the company as a significant player in the field.

Mistral AI has also been actively involved in the debate surrounding the EU AI Act. Reports indicated that the company was lobbying the European Parliament for lighter regulation of open source AI, further cementing its position as a prominent advocate for open source AI.