A new hallucination index developed by the research arm of San Francisco-based Galileo finds that OpenAI’s GPT-4 model shows the least tendency to hallucinate across multiple tasks. The index, published today, assessed several open-source and closed-source large language models (LLMs) to determine how they perform on different tasks. OpenAI’s models consistently outperformed the others across scenarios, making them a top choice for enterprises. The index addresses the problem of hallucinations, which has hindered the deployment of LLMs in critical sectors like healthcare.

Assessing Performance and Hallucination Likelihood

To evaluate the performance and hallucination likelihood of the LLMs, Galileo’s team selected eleven popular models of varying sizes and tested them on three common tasks: question answering without retrieval-augmented generation (RAG), question answering with RAG, and long-form text generation. Using rigorous benchmarks such as TruthfulQA and TriviaQA, the team evaluated how well the models handle general inquiries and how well they reason within provided context.

The evaluation process involved sub-sampling datasets, establishing ground truth, and using Correctness and Context Adherence metrics developed by Galileo. These metrics enable engineers and data scientists to accurately identify when hallucinations occur. Correctness focuses on general logical and reasoning-based mistakes, while Context Adherence measures an LLM’s ability to reason within provided documents and context.

Top Performers in Different Task Categories

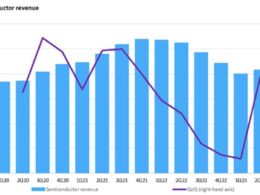

For question answering without retrieval, where the model relies on its internal knowledge, OpenAI’s GPT-4-0613 performed best with a correctness score of 0.77. It was followed by GPT-3.5-Turbo-1106, GPT-3.5-Turbo-Instruct, and GPT-3.5-Turbo-0613 with scores of 0.74, 0.70, and 0.70 respectively. Among the other models, Meta’s Llama-2-70b came closest to the GPT family with a score of 0.65.

For tasks involving retrieval, GPT-4-0613 kept its top position with a context adherence score of 0.76. However, GPT-3.5-Turbo-0613 and GPT-3.5-Turbo-1106 nearly matched it with scores of 0.75 and 0.74 respectively. Hugging Face’s open-source model Zephyr-7b scored 0.71, surpassing Meta’s much larger Llama-2-70b (0.68). The UAE’s Falcon-40b and MosaicML’s MPT-7b had room for improvement, with scores of 0.60 and 0.58 respectively.

In generating long-form text, such as reports, essays, and articles, GPT-4-0613 and Llama-2-70b exhibited the least tendency to hallucinate, with correctness scores of 0.83 and 0.82 respectively. GPT-3.5-Turbo-1106 performed on par with Llama-2-70b, while the 0613 variant scored 0.81. MosaicML’s MPT-7b trailed with a score of 0.53.
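For readers who want to compare the figures side by side, the scores quoted above can be tabulated and ranked per task. The following is a minimal sketch, not Galileo’s tooling: the task keys and the `rank_models` helper are hypothetical names chosen for illustration, and only scores stated as explicit numbers in this article are included.

```python
# Scores copied from the figures quoted in this article. Models whose score
# for a task is not given as an explicit number are left out.
# The task keys below are illustrative labels, not Galileo's identifiers.
REPORTED_SCORES = {
    "qa_without_rag": {  # Correctness
        "GPT-4-0613": 0.77,
        "GPT-3.5-Turbo-1106": 0.74,
        "GPT-3.5-Turbo-Instruct": 0.70,
        "GPT-3.5-Turbo-0613": 0.70,
        "Llama-2-70b": 0.65,
    },
    "qa_with_rag": {  # Context Adherence
        "GPT-4-0613": 0.76,
        "GPT-3.5-Turbo-0613": 0.75,
        "GPT-3.5-Turbo-1106": 0.74,
        "Zephyr-7b": 0.71,
        "Llama-2-70b": 0.68,
        "Falcon-40b": 0.60,
        "MPT-7b": 0.58,
    },
    "long_form": {  # Correctness
        "GPT-4-0613": 0.83,
        "Llama-2-70b": 0.82,
        "GPT-3.5-Turbo-0613": 0.81,
        "MPT-7b": 0.53,
    },
}


def rank_models(scores):
    """Return (model, score) pairs sorted from highest to lowest score."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for task, scores in REPORTED_SCORES.items():
        print(task)
        for position, (model, score) in enumerate(rank_models(scores), start=1):
            print(f"  {position}. {model}: {score:.2f}")
```

Because Python’s `sorted` is stable, models with tied scores keep the order in which they were listed.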

Choosing the Right Model

Although OpenAI’s GPT-4 demonstrated superior performance across tasks, its API pricing may concern some teams because costs can escalate quickly. Galileo therefore recommends the GPT-3.5-Turbo models, which follow closely behind and offer comparable performance at a lower cost. Alternatively, open-source models like Llama-2-70b can strike a balance between performance and cost.
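One way to make this trade-off concrete is to compute how far each lower-cost alternative trails GPT-4 on each task. The sketch below uses only scores quoted in this article; the `gap_to_baseline` helper and the task labels are hypothetical, and no prices are included because the article does not state any.

```python
# Per-task scores quoted in this article, in the order:
# Q&A without RAG, Q&A with RAG, long-form text generation.
TASKS = ("qa_without_rag", "qa_with_rag", "long_form")  # illustrative labels

SCORES = {
    "GPT-4-0613": (0.77, 0.76, 0.83),
    "GPT-3.5-Turbo-0613": (0.70, 0.75, 0.81),
    "Llama-2-70b": (0.65, 0.68, 0.82),
}


def gap_to_baseline(scores, baseline):
    """Return, per model, how many points it trails the baseline on each task."""
    reference = scores[baseline]
    return {
        model: {
            task: round(ref - value, 2)
            for task, ref, value in zip(TASKS, reference, values)
        }
        for model, values in scores.items()
        if model != baseline
    }


if __name__ == "__main__":
    for model, gaps in gap_to_baseline(SCORES, "GPT-4-0613").items():
        print(model, gaps)
```

On these figures, the alternatives fall furthest behind GPT-4 on question answering without retrieval and come closest on long-form generation, so the size of the trade-off depends on which task matters most to a team.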

The index is a continuously evolving analysis: new models emerge and existing ones improve. Galileo plans to update it quarterly so that the rankings of the least to most hallucinating models for each task stay accurate. The Hallucination Index is intended as a comprehensive starting point for teams’ generative AI efforts, providing metrics and evaluation methods for assessing LLMs effectively.

“We hope the Index serves as an extremely thorough starting point to kick-start their Generative AI efforts. We hope the metrics and evaluation methods covered in the Hallucination Index arm teams with tools to more quickly and effectively evaluate LLM models to find the perfect LLM for their initiative,” said Atindriyo Sanyal, co-founder and CTO of Galileo.