Microsoft Research Releases Phi-2 Small Language Model

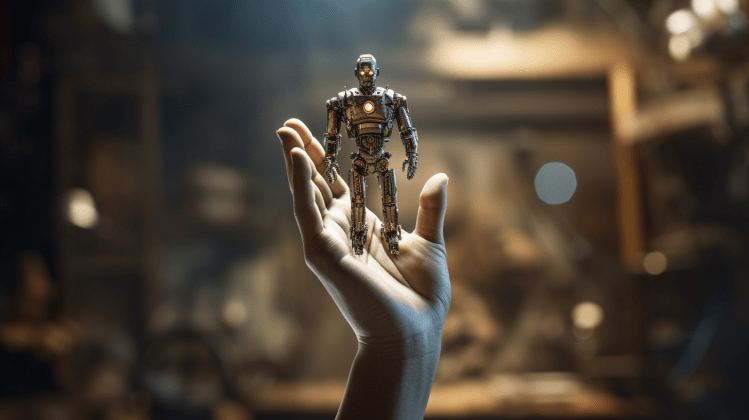

The field of generative AI continues to advance at a rapid pace, as evidenced by a recent announcement from Microsoft Research, Microsoft’s blue-sky research division. It has unveiled the Phi-2 small language model (SLM), a text-to-text AI program designed to be compact yet capable. According to a post on X, Phi-2 is “small enough to run on a laptop or mobile device,” making it accessible for a wide range of applications.

Despite its compact size, Phi-2 is not lacking in performance. With 2.7 billion parameters, the model can, according to Microsoft, match the capabilities of larger counterparts such as Meta’s Llama 2-7B and Mistral-7B, both of which have 7 billion parameters. Phi-2 also outperforms Google’s Gemini Nano 2 model, which has half a billion more parameters, and it delivers fewer instances of “toxicity” and bias in its responses than Llama 2.

Microsoft researchers also took the opportunity to reference a recent staged demo video released by Google, which showcased the capabilities of its upcoming Gemini Ultra AI model. While Gemini Ultra is larger and more powerful than Phi-2, the Microsoft team found that Phi-2 was able to correctly answer questions and correct students’ mistakes when given the same prompts. This finding provides further evidence of Phi-2’s capabilities despite its smaller size.

However, Phi-2 comes with a significant limitation: its licensing. Currently, the model is licensed for “research purposes only,” and commercial use is prohibited under the custom Microsoft Research License. This restriction means that businesses hoping to build commercial products on Phi-2 will have to explore alternative options.