In recent years, generative AI has drawn significant attention as a powerful tool for creating new content. At the same time, there is an ongoing debate over how the data used to train AI models is obtained. Many leading AI companies, including OpenAI and Meta, have trained their models on data scraped from the web without first obtaining the creators' consent. These companies argue that the practice is fair use and legally permissible, but it has sparked growing opposition from artists and authors who say it infringes their copyrights.

A new organization called “Fairly Trained” has emerged in support of creators’ rights. The non-profit, founded and led by CEO Ed Newton-Rex, advocates for AI companies obtaining creators’ consent before using their work as training data. As the organization puts it on its website: “We firmly believe there is a path forward for generative AI that treats creators with the respect they deserve, and that licensing training data is key to this.”

The Flawed Argument for Training on Publicly Available Data

Some argue that training AI on publicly available data is no different from how humans passively observe and learn from art and other creative works. Newton-Rex rejects this comparison, pointing to two key differences. First, AI operates at a scale no human can match, generating vast amounts of output that can displace demand for the very works it learned from. Second, human learning is part of a long-standing social contract: creators publish their work expecting others to learn from it. AI training has no such consensus behind it, because creators never anticipated that their work would be used to produce competing content at scale. In his view, these differences call for a different approach, one that respects creators’ rights.

The Call for Licensing Models

Fairly Trained urges AI companies to adopt a licensing model and obtain permission from creators before using their work. Newton-Rex advises companies that have already trained on publicly posted data to change course and seek licensing agreements with the creators. OpenAI has recently taken a step in this direction by licensing content from some news outlets, although it continues to defend its use of unlicensed public data. Fairly Trained believes that moving to a licensing model can create an ecosystem that benefits both creators and AI companies.

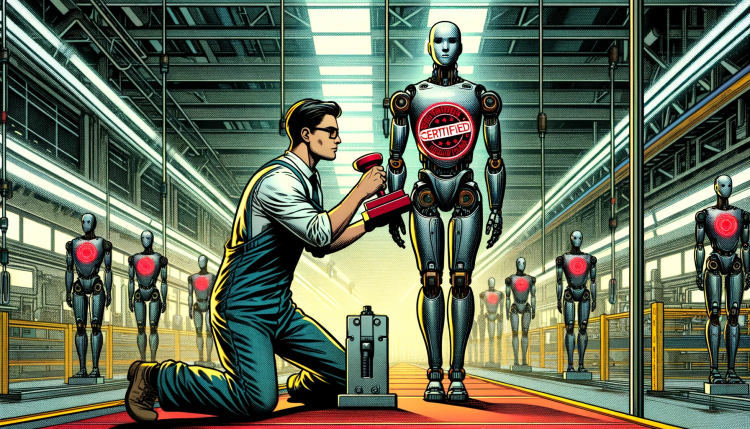

Believing that consumers and companies prefer to work with AI companies that prioritize consent and respect for creators, Fairly Trained offers a “Licensed Model (L)” certification for AI providers. Applicants complete an online form followed by a written submission to Fairly Trained. To cover its operating costs, the organization charges a certification fee scaled to each applicant’s annual revenue.

Several AI companies have already sought and received the L certification from Fairly Trained, including Beatoven.AI, Boomy, BRIA AI, Endel, LifeScore, Rightsify, Somms.ai, Soundful, and Tuney. The certification signals that these companies obtained their training data with consent rather than using creators’ work without permission.

As the generative AI landscape continues to evolve, organizations like Fairly Trained can play an important role in promoting ethical practices and protecting creators’ rights. Backed by industry experts and by organizations such as the Association of American Publishers and Universal Music Group, Fairly Trained aims to create a more transparent and responsible environment for generative AI.