Amazon Web Services (AWS) Steps Up Its Game in Generative AI

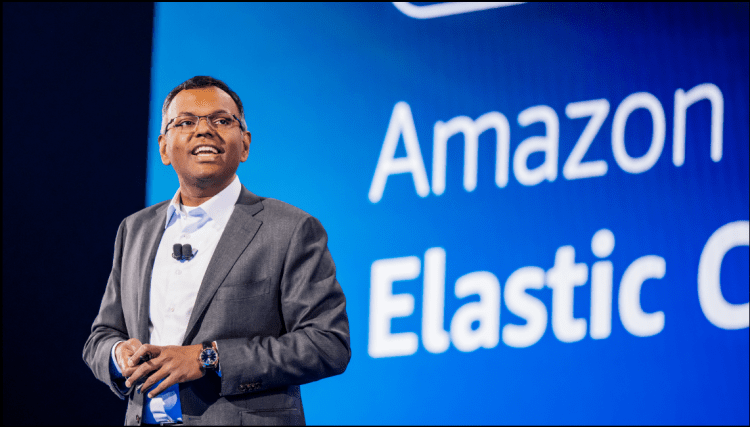

Cloud computing giant Amazon Web Services (AWS) has recently made significant strides in generative artificial intelligence (AI). Previously seen as playing catch-up with rivals Microsoft Azure and Google Cloud, Amazon has now emerged as a leading player in supporting enterprises that build generative AI projects. At the AWS re:Invent conference, Swami Sivasubramanian, AWS’s vice president of Data and AI, unveiled a series of announcements that highlight Amazon’s focus on innovation and customer choice.

Expanding Model Support and Multi-Modal Search

One way Amazon sets itself apart is by offering customers a wide range of options. Through its Bedrock service, AWS provides access to a range of large language models (LLMs), including Anthropic’s Claude, models from AI21 Labs and Cohere, and Amazon’s own Titan models. Sivasubramanian announced Bedrock support for Anthropic’s Claude 2.1, which offers an industry-leading 200K-token context window, improved accuracy, and fewer hallucinations. AWS also added Meta’s Llama 2 70B, further demonstrating its commitment to openly available models.
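As a rough illustration of what Bedrock access looks like in practice, the sketch below calls Claude 2.1 through the Bedrock runtime API using boto3, the AWS SDK for Python. It assumes boto3 is installed, AWS credentials are configured, and access to Claude 2.1 has been granted in the chosen Region; the model ID `anthropic.claude-v2:1` and the `us-east-1` default are assumptions to verify in the Bedrock console. The helper names `build_claude_request` and `ask_claude` are hypothetical, and the request body follows the Human/Assistant text-completions format that Bedrock accepted for Claude 2.x models.

```python
import json

# Assumed Bedrock model ID for Claude 2.1; confirm the IDs available in your
# Region (for example with the Bedrock console or ListFoundationModels).
CLAUDE_21_MODEL_ID = "anthropic.claude-v2:1"


def build_claude_request(question: str, max_tokens: int = 300) -> str:
    """Return a JSON body in the Claude 2.x text-completions format (hypothetical helper)."""
    # Claude 2.x expects alternating "Human:" / "Assistant:" turns that end
    # with an open "Assistant:" turn for the model to complete.
    prompt = f"\n\nHuman: {question}\n\nAssistant:"
    return json.dumps({"prompt": prompt, "max_tokens_to_sample": max_tokens})


def ask_claude(question: str, region: str = "us-east-1") -> str:
    """Send the request to Bedrock; needs boto3, AWS credentials, and model access."""
    import boto3  # imported lazily so build_claude_request works without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=CLAUDE_21_MODEL_ID,
        body=build_claude_request(question),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream whose JSON payload has a "completion" field.
    return json.loads(response["body"].read())["completion"]
```

Calling `ask_claude("Summarize this contract.")` would return Claude’s completion as a string. Keeping the request builder separate from the network call means the prompt format can be checked without AWS credentials.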

To meet the demand for multi-modal search, Amazon introduced Titan Multimodal Embeddings. The model converts text and images into numerical representations called vectors, or embeddings. Items with related meaning end up close together in this vector space, which lets applications find related content and return more relevant results. For instance, a furniture store can use multi-modal search to let customers search for a sofa with a photo and find visually similar options. Combining visual and textual information in this way can significantly improve the customer experience.
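The matching step behind this kind of search can be sketched with cosine similarity, a common way to compare embeddings. In the sketch below, the catalog names and the short four-dimensional vectors are made up for illustration; in a real system each vector would come from an embedding model such as Titan Multimodal Embeddings, which places images and text in a shared vector space, and the brute-force loop would usually be replaced by a vector database index.

```python
import math

# Hypothetical catalog. Real embeddings would come from an embedding model
# and have hundreds of dimensions; these 4-D values are invented for illustration.
CATALOG = {
    "grey fabric sofa": [0.9, 0.1, 0.3, 0.0],
    "leather armchair": [0.6, 0.7, 0.1, 0.1],
    "oak dining table": [0.1, 0.2, 0.9, 0.4],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vector, catalog, top_k=2):
    """Rank catalog items by similarity to the query embedding (brute force)."""
    scored = [(cosine_similarity(query_vector, vec), name) for name, vec in catalog.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]


# Hypothetical embedding of a customer's photo of a sofa.
photo_of_sofa = [0.85, 0.15, 0.25, 0.05]
print(search(photo_of_sofa, CATALOG))  # → ['grey fabric sofa', 'leather armchair']
```

Because a text query such as "grey couch" would be embedded into the same space, the same ranking step would apply to text-to-image search as well.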

Expanded Text Generation Capabilities and Image Generation with Watermarking

The AWS announcements also included new text generation models. Titan Text Lite is a lightweight model suited to tasks such as text summarization within chatbots and copywriting, while Titan Text Express excels at open-ended text generation and conversational chat. Both models target AI-powered virtual assistants and automated chat systems.

Amazon also unveiled the Titan Image Generator, which lets users produce high-quality, realistic images from simple natural-language prompts and edit existing images the same way. The model can be customized with a company’s own data, making it easier for businesses to keep generated content on brand. To support authenticity and combat disinformation, every image the model generates carries an invisible watermark by default. These watermarks are designed to resist tampering and help identify AI-generated images, which makes spreading false information more difficult and sets Amazon’s product apart from competing models.

Improvements in Database Integration and Vector Support

Recognizing the importance of data integration, Amazon has taken significant steps to break down silos between its data services so that customers can more easily bring proprietary data to LLMs. For example, a zero-ETL integration between Amazon OpenSearch Service and Amazon S3 lets users analyze and visualize log data stored in S3 in one place without building complex ETL (extract, transform, load) pipelines. Amazon has also expanded vector search support across multiple databases to meet growing demand for fast, precise similarity search.

Empowering Enterprises with Custom Models and Efficient Model Training

To help enterprises build custom models, AWS established the Generative AI Innovation Center, which offers expert help in areas such as data science and strategy. Starting next year, the center will offer a custom model program for Anthropic’s Claude, letting companies fine-tune Claude with their own data.

AWS also introduced SageMaker HyperPod, a service designed to simplify the challenging process of training foundation models. HyperPod provides managed, resilient clusters of accelerated instances, including the latest GPUs, and can automatically recover from hardware failures, which AWS says significantly reduces model training time. AWS continues to invest in SageMaker with new features across inference, training, and MLOps.

Other Announcements

AWS’s announcements extended beyond the aforementioned highlights. Some of the additional key updates include:

- Model Evaluation on Amazon Bedrock in preview mode: This feature allows companies to evaluate and select the best foundation model for their specific use cases.

- Introduction of the RAG DIY app: This app showcases generative AI agents by letting users complete complex, multistep tasks through natural-language queries. By combining LLMs with various APIs, it returns customized recommendations and relevant information.

- Generative AI Innovation Center support for custom models: The center provides expert guidance to enterprises building custom models, with a particular focus on Anthropic’s Claude.

- Significant database integration, including vector support: Amazon expands its database integration efforts, allowing vector data to be stored and queried across multiple databases, enhancing search capabilities.

- Neptune Analytics with vector search: Amazon Neptune Analytics combines graph analytics with vector search, helping users uncover hidden relationships in their data and give LLM applications richer context.

- AWS Clean Rooms ML: This new capability lets customers and their partners apply ML models to their collective data for predictive insights without sharing the underlying raw data.

- Amazon Q generative SQL in Amazon Redshift: Amazon Q, AWS’s AI-powered assistant, can generate SQL query recommendations in Redshift from natural-language prompts.

- Expansion of SageMaker features: AWS introduced a range of features to enhance SageMaker’s capabilities across inference, training, and MLOps.

- Vector search for Amazon MemoryDB for Redis (preview): AWS previewed vector search in MemoryDB for Redis, aimed at large companies that need both security and speed.
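RAG commonly stands for retrieval-augmented generation: relevant passages are retrieved first and added to the prompt, so the LLM answers from that context. The minimal sketch below shows only that general pattern, not the RAG DIY app’s actual implementation. The documents and function names are hypothetical, and retrieval uses simple word overlap as a stand-in for the vector search a production system would typically use; the resulting prompt would then be sent to an LLM, for example through Bedrock.

```python
import re

# Hypothetical knowledge base for a home-improvement assistant. In a real
# RAG application these passages would usually live in a vector store.
DOCUMENTS = [
    "Use a 6 mm masonry bit to drill into brick walls.",
    "Latex paint needs about 4 hours to dry before a second coat.",
    "Turn off the breaker before replacing a light switch.",
]


def tokenize(text):
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Return the passages that share the most words with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_rag_prompt(question, documents, top_k=1):
    """Augment the user's question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {p}" for p in retrieve(question, documents, top_k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


print(build_rag_prompt("How long should paint dry between coats?", DOCUMENTS))
```

Grounding the prompt in retrieved passages is what lets a RAG application answer from a company’s own data rather than only from what the model learned during training.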