It was almost impossible not to be captivated by the vibrant and exciting atmosphere of Meta’s Connect developer and creator conference. Held in person for the first time since the pandemic, the event emanated a Disneyland-like vibe at Meta headquarters in Menlo Park, California.

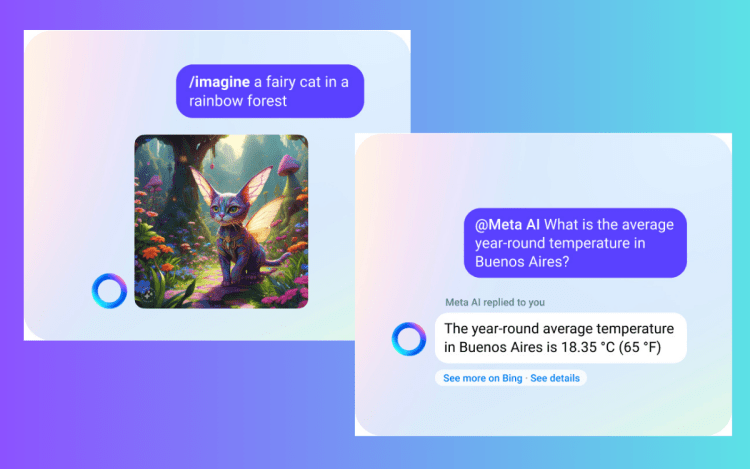

Hearing the crowd applaud every time Mark Zuckerberg unveiled another AI-driven product was reminiscent of the awe one experiences as a child watching an orca show at SeaWorld. The interactive elements of Meta’s AI offerings were truly impressive, from AI stickers and characters to AI-generated images of Mark Zuckerberg’s dog. The demonstrations were enough to spark anyone’s curiosity and leave them wanting more.

Exciting AI Innovations by Meta

The AI products showcased by Meta at the conference were quite enticing. For instance, the idea of engaging with Snoop Dogg as a dungeon master on Facebook, Instagram, or WhatsApp sounds incredibly fun. Additionally, the introduction of Ray-Ban Smart Glasses with built-in voice AI chat is undeniably appealing. And who wouldn’t appreciate AI-curated restaurant recommendations in their group chat? These innovations have the potential to make our lives more convenient and enjoyable.

However, Meta’s playful approach to AI, even in tools intended for business and brand use, comes at a time when the rapid release of AI products by Big Tech giants is raising concerns about security, privacy, and hubris.

Concerns over AI Product Releases

Recent AI product releases by companies like Amazon and Microsoft have put the spotlight on the potential risks of these technologies. Last week’s Amazon Alexa and Microsoft Copilot announcements left some questioning the underlying security and privacy measures of these products.

Google’s update of Bard drew mixed reviews, and another feature of Bard came under scrutiny. Google Search began indexing shared Bard conversational links, potentially exposing private conversations to the public. This revelation triggered a wave of concern on social media, prompting Google to address the issue and reassure users of its commitment to privacy.

“Bard allows people to share chats, if they choose,” said Danny Sullivan, Google’s public liaison for search. “We also don’t intend for these shared chats to be indexed by Google Search. We’re working on blocking them from being indexed now.”

While measures are being taken to rectify the situation, it remains to be seen whether users will regain their trust in Bard.

Another concerning moment came with OpenAI CEO Sam Altman’s announcement on X (formerly Twitter) of ChatGPT’s new voice and vision features. Lilian Weng, head of safety systems at OpenAI, described her “therapy” session with ChatGPT on the same platform. Her comments drew pushback because she lacks mental health expertise, raising concerns about the responsible use of AI in therapy.

Ilya Sutskever, OpenAI’s cofounder and chief scientist, added that in the future we will have “wildly effective” and “dirt cheap AI therapy” that will “lead to a radical improvement in people’s experience of life.”

While it is possible that AI tools could be used for emotional support, promoting them as therapy raises ethical questions. The implications of such usage need to be thoroughly examined to ensure the well-being of individuals who may rely on these tools.

All the buzz surrounding Meta’s AI announcements points to a significant milestone in bringing generative AI to the mainstream. With the integration of AI chat in Facebook, AI-generated images in Instagram, and the ability to share AI chats, stickers, and photos in WhatsApp, the number of generative AI users is projected to soar into the billions.

Considering the fast-paced deployment of AI products by Big Tech companies, it is crucial to comprehend the profound implications of such wide-scale adoption. We must not overlook the potential risks and challenges that come with this new AI-driven era.

Meta, however, says it is committed to building generative AI features responsibly. The company has emphasized safeguards, continuous improvement of features, and collaboration with stakeholders, including governments, AI experts, and privacy advocates, to establish responsible guidelines.

Yet, as with any new technology, the true consequences of product rollouts remain to be seen. We are all essentially part of a vast experiment, as generative AI products and features are embraced on a global scale.

As billions of people explore the latest AI tools from Meta, Amazon, Google, and Microsoft, more challenges and failures like those seen with Bard are likely to arise. Let’s hope the repercussions amount to nothing worse than, say, a chat with Snoop Dogg the Dungeon Master gone awry.