Last week, Mark Zuckerberg’s remarks about Meta’s AI strategy sparked interest in the massive internal dataset the company uses to train its Llama models. The dataset consists of hundreds of billions of publicly shared images and tens of billions of public videos from Facebook and Instagram. According to Zuckerberg, it is larger than the Common Crawl dataset. However, data matters for AI models well beyond training. It is inference, the ongoing use of data by large companies running these models, that is fueling AI’s voracious appetite.

Understanding the Role of Inference in AI

“[Inference is] the bigger market, I don’t think people realize that,”

– Brad Schneider, founder and CEO of Nomad Data

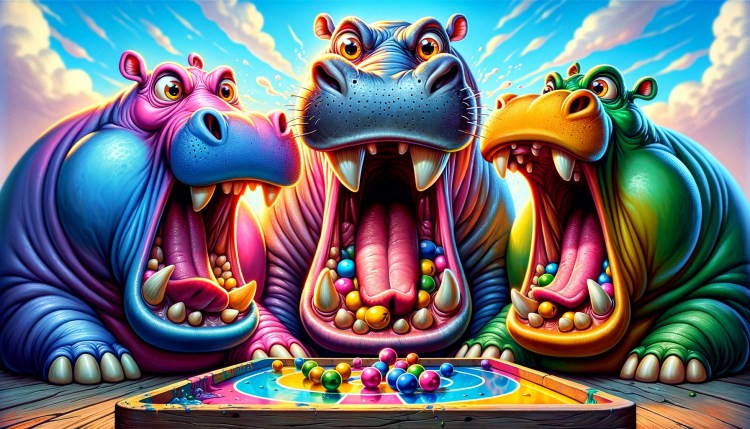

Inference is the process of running live data through a trained AI model to make predictions or complete tasks. It is an ongoing requirement for large companies that call large language model (LLM) APIs to deploy models across a range of use cases. This demand is turning AI models into the equivalent of Hasbro’s “Hungry Hungry Hippos” game, with the models gobbling up data marbles to keep working.

Nomad Data, a New York City-based company founded in 2020, recognizes the importance of inference in the AI landscape. Rather than acting as a data broker, Nomad offers data discovery: it uses LLMs to match data buyers with more than 2,500 data vendors. Companies can describe the specific data they need in natural language instead of choosing from predefined datasets.

“That’s sort of the magic.”

– Brad Schneider, founder and CEO of Nomad Data

Matching data demand with supply in real time gives companies a streamlined way to find the highly specific datasets their inference use cases require. Nomad’s combination of LLMs and natural language processing is what makes this matching and exploration efficient.

Customized Training and the Value of External Data

Training data matters, but inference requires a continuous feed of live data to produce useful results. According to Schneider, finding the right data to “feed” the model has been a challenge. Large enterprises typically start with their internal data. Incorporating external text data, however, has been difficult, because that data was often buried and considered hard to access.

Fortunately, LLMs can now draw inferences from external text data in a matter of seconds, unlocking valuable insights from previously overlooked sources. Schneider likens this trove of text to buried treasure that has suddenly become essential and valuable for AI applications.

Customized training is another important piece of the picture. Training a model for a distinct purpose, such as recognizing Japanese receipts or identifying advertisements in pictures of football fields, requires specific datasets. Nomad Data has seen a growing number of corporations, including media companies and automotive manufacturers, offer their data on the platform for customized training.

“Now I am going to buy data that I had no value for before, that’s going to be instrumental in building my business because this new technology allows me to use it.”

– Brad Schneider, founder and CEO of Nomad Data

This continuous cycle of data supply and demand in the AI hunger chain is driven by inference and by the varied requirements of customized training. LLMs play a crucial role in helping businesses use text data effectively and unlock its potential for growth and innovation.