The Changing Perspective on Artificial General Intelligence (AGI)

Two months after being fired by the OpenAI nonprofit board (only to be rehired a few days later), CEO Sam Altman struck a noticeably softer, far less prophetic tone at the World Economic Forum in Davos, Switzerland, on the idea that powerful artificial general intelligence (AGI) will arrive suddenly and radically disrupt society.

The Evolving Understanding of AGI

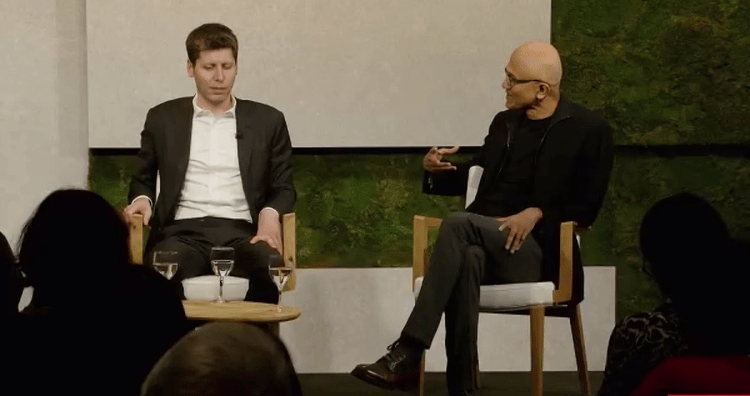

In a conversation with Microsoft CEO Satya Nadella and The Economist editor-in-chief Zanny Minton Beddoes, Altman stated, “I don’t think anybody agrees anymore what AGI means.” He further added, “When we reach AGI, the world will freak out for two weeks and then humans will go back to do human things.” Altman emphasized that AGI will be a “surprisingly continuous thing” and that every year, a new model will be released that is significantly better than the year before.

Altman’s revised stance aligns with comments he made during a conversation organized by Bloomberg, where he stated that AGI could be developed in the “reasonably close-ish future” but would have a lesser impact on society and jobs than anticipated.

“A misaligned superintelligent AGI could cause grievous harm to the world; an autocratic regime with a decisive superintelligence lead could do that too.” – Sam Altman

Altman expressed the importance of adjusting incrementally to the progress of powerful AI, expecting it to accelerate global advancements while acknowledging the potential risks. His blog post titled “Planning for AGI and Beyond” showcased both the transformative potential and the need for caution when dealing with AGI.

“If AGI is successfully created, this technology could help us elevate humanity by increasing abundance, turbocharging the global economy, and aiding in the discovery of new scientific knowledge that changes the limits of possibility.” – Sam Altman

Despite his revised views, Altman remains intrigued by the potential of AGI, noting that AI could possess superhuman persuasion abilities before achieving general intelligence.

Changing Dynamics at OpenAI

Altman’s altered perspective on AGI comes at a notable moment, coinciding with the dissolution of OpenAI’s board and subsequent changes in leadership. OpenAI’s nonprofit board, which held the responsibility of determining when AGI had been attained, underwent significant restructuring. Former board members with expertise in mitigating AGI risks were replaced by individuals associated with technology and finance.

This restructuring raises questions about OpenAI’s direction and about how much influence commercial interests will have once AGI is achieved. The tension between commercialization and the mission to prevent catastrophic risks from AGI remains at the forefront.

“You’re not worried that a future OpenAI board could suddenly say we’ve reached AGI? You’ve had some surprises from the OpenAI board.” – Zanny Minton Beddoes

In response, Altman said that the OpenAI charter allows for reconsideration and adaptation as situations arise, highlighting the uncertainty surrounding the future of AI and AGI.

Microsoft’s Nadella acknowledged the need for responsible partnerships between organizations to ensure that the benefits of AGI are maximized and any unintended consequences are minimized. He emphasized that governments and society should have a say in the development and deployment of AGI.

“The world is very different… Governments of the world are interested, civic society is interested, they are going to have a say.” – Satya Nadella

Altman, reflecting on the November drama that led to his temporary firing, humorously expressed his commitment to learning lessons early when the stakes are relatively low.