Nvidia is partnering with Microsoft to enhance its co-sell strategy. At Microsoft’s Ignite conference, Nvidia announced the launch of an AI foundry service on Azure, Microsoft’s cloud platform. The service will let enterprises and startups build custom AI applications on Azure, including applications that draw on enterprise data through retrieval-augmented generation (RAG). According to Jensen Huang, Nvidia’s founder and CEO, the AI foundry service combines the company’s generative AI model technologies, LLM training expertise, and giant-scale AI factory. Huang emphasized that the service was built on Microsoft Azure so that global enterprises can connect their custom models with Microsoft’s leading cloud services.

In addition to the AI foundry service, Nvidia introduced new 8-billion-parameter models. These models are part of the foundry service and serve as foundation models for building enterprise chat and Q&A applications. Microsoft also plans to bring Nvidia’s next-generation GPU to Azure in the near future, further strengthening the partnership between the two companies.

Nvidia AI Foundry Service on Azure

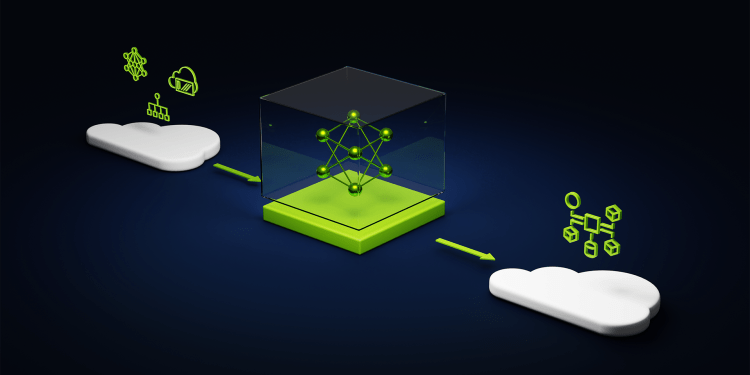

Nvidia’s AI foundry service on Azure gives enterprises on the cloud platform all the components they need to build a custom generative AI application tailored to their business needs. The service combines Nvidia AI foundation models, the NeMo framework, and the Nvidia DGX Cloud supercomputing service. Manuvir Das, VP of enterprise computing at Nvidia, said this end-to-end offering lets customers carry out the entire enterprise generative AI workflow with Nvidia technology directly within Azure. Because the offering lives inside Azure, Nvidia and Microsoft can also co-sell it to Azure customers.

To give enterprises using the foundry service a wide range of foundation models, Nvidia is introducing the Nemotron-3 8B family of models. These models support the development of advanced enterprise chat and Q&A applications in industries such as healthcare, telecommunications, and financial services. They are multilingual and will be available through the Azure AI model catalog, Hugging Face, and the Nvidia NGC catalog. The Nvidia catalog also includes community foundation models such as Llama 2, Stable Diffusion XL, and Mistral 7B.

Once users have chosen a model, they can move on to training and deployment using Nvidia DGX Cloud and Nvidia AI Enterprise software, both available through the Azure Marketplace. DGX Cloud offers instances that scale to thousands of Nvidia Tensor Core GPUs for training, and it includes the AI Enterprise toolkit, which bundles the NeMo framework and the Nvidia Triton Inference Server. Together, these tools make it faster to customize and deploy large language models (LLMs).

Expansion of Partnership and Hardware Integration

Beyond the AI foundry service, Microsoft and Nvidia have expanded their partnership to include the chipmaker’s latest hardware. Microsoft introduced new NC H100 v5 virtual machines for Azure, which are the industry’s first cloud instances featuring a pair of PCIe-based H100 GPUs connected via Nvidia NVLink. These instances offer more AI compute performance and memory capacity, making them well suited to both inference and training workloads. Microsoft also plans to add the new Nvidia H200 Tensor Core GPU to its Azure fleet next year, giving users more options for running AI workloads.

Nvidia also announced updates to accelerate LLM work on Windows devices. Chief among them is an update to TensorRT-LLM for Windows that adds support for new large language models and delivers up to five times faster inference. The update also makes TensorRT-LLM compatible with OpenAI’s Chat API, so developers can run projects and applications built against that API locally on Windows 11 PCs with Nvidia GeForce RTX 30 Series and 40 Series GPUs.